Model evaluation

Model evaluation checks that the model's performance is not biased by the training data and that the model generalizes to new, unseen variants. To do this, the pathogenicity of each variant in an independent dataset (variants not included in the training dataset) is predicted and compared with the known labels.
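The held-out step can be sketched as follows. This is a minimal illustration, not AlphaMissense's actual pipeline: variants are assumed to be hypothetical `(gene, position, ref, alt)` tuples, the gene names are placeholders, and `predict` stands in for any scoring function that returns a pathogenicity score.

```python
from collections.abc import Callable, Iterable

# Hypothetical variant key: (gene, protein position, reference amino acid, alternate amino acid)
Variant = tuple[str, int, str, str]


def independent_test_set(training: Iterable[Variant],
                         candidates: Iterable[Variant]) -> list[Variant]:
    """Keep only candidate variants that never appeared in the training data."""
    seen = set(training)
    return [v for v in candidates if v not in seen]


def predict_held_out(training: Iterable[Variant],
                     candidates: Iterable[Variant],
                     predict: Callable[[Variant], float]) -> dict[Variant, float]:
    """Score every held-out variant with a (hypothetical) predict function."""
    return {v: predict(v) for v in independent_test_set(training, candidates)}


# Placeholder variants for illustration only
train = [("GENE_A", 10, "R", "W"), ("GENE_B", 20, "R", "H")]
candidates = [("GENE_B", 20, "R", "H"), ("GENE_C", 30, "G", "D")]

# Dummy scorer: a real model would return a learned pathogenicity score
scores = predict_held_out(train, candidates, predict=lambda v: 0.5)
print(scores)  # only GENE_C survives; GENE_B was seen during training
```

Filtering by exact variant identity is the simplest form of hold-out; stricter evaluations may also exclude related variants (for example, other substitutions at the same position), which this sketch does not attempt.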

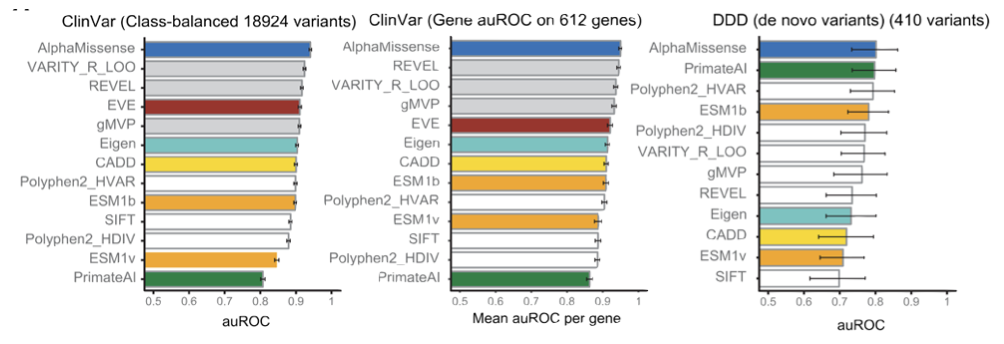

The AlphaMissense model is evaluated using multiple benchmark datasets:

* ClinVar test set
* De novo variants from rare disease patients
* Multiplexed Assays of Variant Effect (MAVEs): experimentally validated data

Note

Performance evaluation metrics: measures used to evaluate the performance of a model

Accuracy

Percentage of correctly predicted disease-causing or benign missense variants out of all missense variants

Precision

Percentage of correctly predicted disease-causing missense variants out of all the predicted disease-causing variants

Recall

Percentage of correctly predicted disease-causing variants out of all actual disease-causing variants
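The three definitions above can be expressed with true positives (TP), true negatives (TN), false positives (FP) and false negatives (FN). The minimal Python sketch below assumes the predictions are already binary (1 = pathogenic, 0 = benign); a continuous pathogenicity score would first have to be converted into a class with some threshold, which is not specified here. The toy labels are made up.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN, treating 1 as pathogenic (disease-causing) and 0 as benign."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(y_true, y_pred):
    """Percentage of all variants whose class (pathogenic or benign) was predicted correctly."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return 100 * (tp + tn) / (tp + tn + fp + fn)


def precision(y_true, y_pred):
    """Percentage of predicted-pathogenic variants that are truly pathogenic."""
    tp, _, fp, _ = confusion_counts(y_true, y_pred)
    return 100 * tp / (tp + fp) if tp + fp else 0.0


def recall(y_true, y_pred):
    """Percentage of truly pathogenic variants that were predicted pathogenic."""
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    return 100 * tp / (tp + fn) if tp + fn else 0.0


# Toy labels: 1 = pathogenic, 0 = benign (made-up values for illustration only)
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 1]

print(accuracy(y_true, y_pred))   # 62.5
print(precision(y_true, y_pred))  # 60.0
print(recall(y_true, y_pred))     # 75.0
```

Here TP = 3, TN = 2, FP = 2 and FN = 1. Returning 0.0 when a denominator is zero (no predicted or no actual pathogenic variants) is a convention chosen for this sketch; other tools may return an undefined value instead.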

Area Under the Receiver Operating Characteristic Curve (auROC)

auROC = 1 represents a perfect classifier: at a suitable threshold it correctly identifies all pathogenic variants, and no benign variants are classified as pathogenic

auROC = 0.5 represents a model that performs no better than random guessing
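auROC can be computed without drawing the curve: it equals the probability that a randomly chosen pathogenic variant receives a higher score than a randomly chosen benign variant, with ties counted as half (the Mann-Whitney formulation). The quadratic-time Python sketch below is for illustration only; the scores are made up, and on real data a library routine such as scikit-learn's `roc_auc_score` would normally be used.

```python
def auroc(y_true, scores):
    """Area under the ROC curve via the Mann-Whitney pairwise-comparison formula.

    y_true: 1 = pathogenic, 0 = benign; scores: higher means more likely pathogenic.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one pathogenic and one benign variant")
    wins = 0.0
    # Compare every pathogenic score with every benign score
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0  # pathogenic variant ranked higher: correct ordering
            elif p == n:
                wins += 0.5  # tie: counts as half
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 0]
print(auroc(labels, [0.9, 0.8, 0.3, 0.1]))  # 1.0: perfect separation
print(auroc(labels, [0.5, 0.5, 0.5, 0.5]))  # 0.5: uninformative, all scores tied
print(auroc(labels, [0.9, 0.4, 0.6, 0.1]))  # 0.75: one benign variant outranks one pathogenic
```

The two reference points in the notes fall out directly: a model that always ranks pathogenic above benign scores 1, while a model whose scores carry no information about the label scores 0.5 on average.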